Halfway through an Azure migration, at a stability point with 50% of services in Azure and 50% on-prem, we started seeing these errors from the on-prem software:

SQLSTATE[HY000] Unable to connect: Adaptive Server is unavailable or does not exist (severity 9)

SQLSTATE[HY000]: General error: 20047 DBPROCESS is dead or not enabled [20047] (severity 1)

com.microsoft.sqlserver.jdbc.SQLServerException: Read timed out

Looking first at a packet capture taken locally, on-prem, where it was easy to get, a pattern emerged: a particular TCP stream that had worked for hours would just stop, with retransmits (and the inevitable timeout).

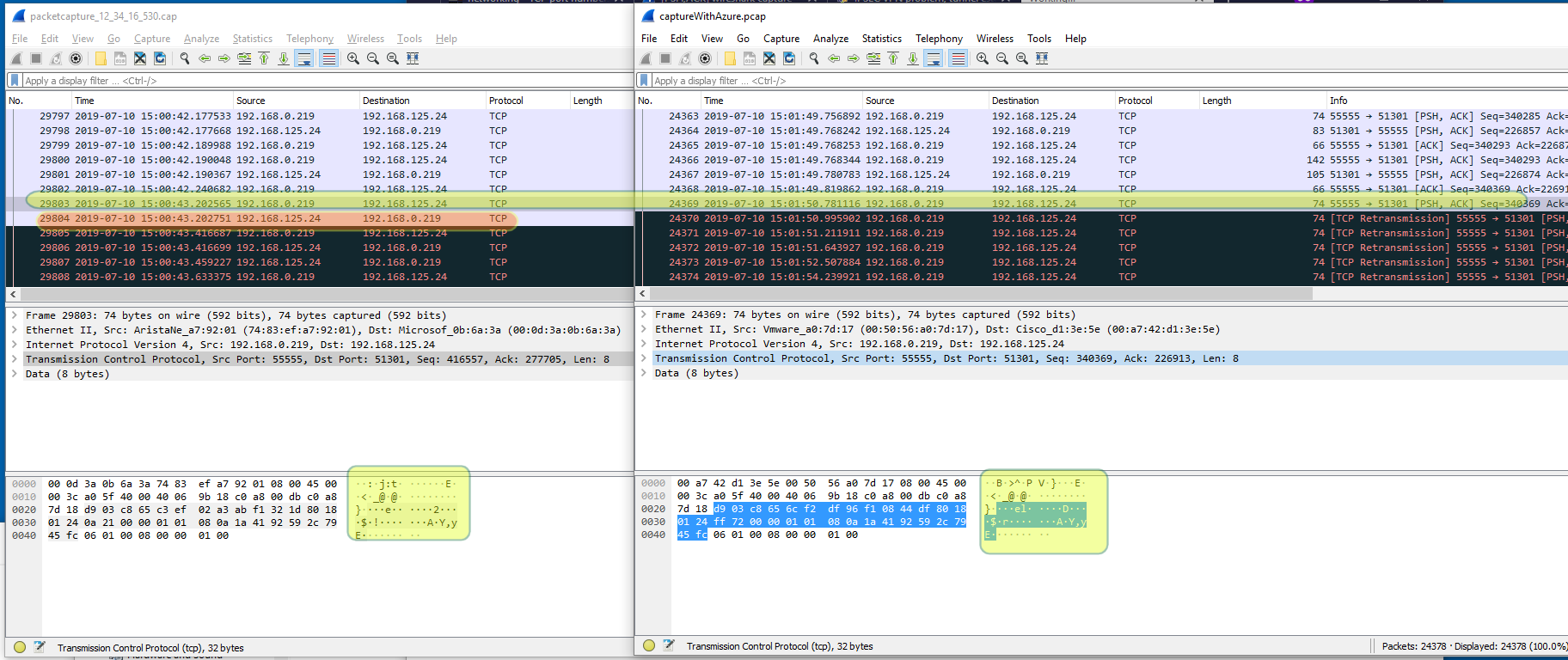

The obvious next step seemed to be getting a view from the remote (Azure) end. Below are the side-by-side captures: on the left, from the Ethernet interface of an Azure MSSQL server, and on the right, from the Ethernet interface of the on-prem LAMP server.

The yellow highlight marks matching packets, showing that the two captures are lined up.

The red highlight shows the packet we expect back, but don't get.

Notice that the flow becomes unidirectional at this point. The on-prem LAMP server keeps retransmitting its last packet, but never receives the retransmits of the Azure reply. If it did, there would be nothing to care about.

Not shown in the capture is the final packet, where Azure RSTs the stream since it has not had a response within the allocated time. The RST arrives on-prem at 15:02:18; it is the first packet since 24367 that has arrived from Azure.
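The "goes unidirectional" pattern can also be found mechanically once a capture has been exported to a list of timestamps and directions. What follows is only a minimal sketch, not the method used for the analysis above: the `Packet` record, the `"onprem"`/`"azure"` labels, the `find_stall` helper and the 5 second threshold are all hypothetical, and the sample timestamps are made up for illustration rather than taken from the real capture.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Packet:
    ts: float  # capture timestamp in seconds
    src: str   # which end sent it: "onprem" or "azure" (hypothetical labels)


def find_stall(packets: List[Packet], local: str = "onprem",
               min_silence: float = 5.0) -> Optional[float]:
    """Return the timestamp of the last remote packet seen before the local
    side kept sending for at least min_silence seconds with nothing coming
    back, or None if the flow never stalls that long."""
    last_remote_ts = None
    for p in sorted(packets, key=lambda pkt: pkt.ts):
        if p.src != local:
            # Anything from the far end resets the silence window.
            last_remote_ts = p.ts
        elif last_remote_ts is not None and p.ts - last_remote_ts >= min_silence:
            # Local side is still talking, remote has been silent too long.
            return last_remote_ts
    return None


# Made-up flow: two normal exchanges, then on-prem retransmits into silence
# until a late packet (standing in for the RST) finally arrives.
stalled = [
    Packet(0.0, "onprem"), Packet(0.1, "azure"),
    Packet(1.0, "onprem"), Packet(1.1, "azure"),
    Packet(2.0, "onprem"), Packet(2.4, "onprem"), Packet(3.2, "onprem"),
    Packet(4.8, "onprem"), Packet(8.0, "onprem"),
    Packet(30.0, "azure"),
]
healthy = [
    Packet(0.0, "onprem"), Packet(0.1, "azure"),
    Packet(1.0, "onprem"), Packet(1.1, "azure"),
]

print(find_stall(stalled))
print(find_stall(healthy))
```

Scanning in time order, rather than just comparing the last packet from each side, means a late RST from the far end does not hide the stall that preceded it.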

The problem clearly isn't with the LAMP or MSSQL ends.

In between are a load balancer that implements an Availability Group Listener, a Cisco ASA (I think it's a 5500), and the internet.

As a test, an OpenVPN tunnel was brought up from inside Azure to the on-prem firewall and routing adjusted (sadly, some NAT was necessary at the Azure end, since by now this was a production setup).

With the VPN in place, the test ran for more than 7 hours without failing.
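For anyone wanting to repeat this kind of soak test, here is a rough sketch of a harness that reports how long a check survives. It is not the test that was actually run here: the `soak` function and its parameters are hypothetical, and `check` stands in for whatever exercises the path, for example a trivial query on one long-lived database connection.

```python
import time
from typing import Callable, Optional


def soak(check: Callable[[], None], interval: float, max_duration: float,
         clock: Callable[[], float] = time.monotonic,
         sleep: Callable[[float], None] = time.sleep) -> Optional[float]:
    """Call check() every `interval` seconds for up to `max_duration` seconds.

    Returns the number of seconds elapsed when check() first raised,
    or None if it never failed within max_duration."""
    start = clock()
    while clock() - start < max_duration:
        try:
            check()
        except Exception:
            # First failure: report how long the path stayed healthy.
            return clock() - start
        sleep(interval)
    return None


if __name__ == "__main__":
    def always_ok() -> None:
        pass  # replace with a real check on a persistent connection

    # Short demo run: three checks, one second apart.
    print(soak(always_ok, interval=1.0, max_duration=3.0))
```

The `clock` and `sleep` arguments are injectable only so the loop can be exercised quickly with a fake clock. Since the failures above hit streams that had been up for hours, `check` should reuse one connection rather than reconnecting each time.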